Hello everyone! In my latest video, I explored the world of large language models, with a particular focus on the state of GPT models. If you haven't watched it yet, I highly recommend it. For those who prefer reading, or simply want a recap, this blog post is for you. If you liked the video and want a more detailed talk from one of the leading figures in AI, Andrej Karpathy, check out his "State of GPT" talk at Microsoft Build 2023.

Introduction to Large Language Models and the State of GPT Models

Large language models like GPT are changing the way we interact with technology. These models are designed to understand and generate human-like text, which opens up applications in fields such as customer service, content creation, and even healthcare.

GPT, which stands for Generative Pre-trained Transformer, is a series of models developed by OpenAI. GPT-3 is a behemoth with 175 billion parameters, and at the time of Karpathy's talk the latest iteration was GPT-4, whose parameter count OpenAI has not disclosed. But what makes these models truly remarkable is not just their size, but the way they are trained.
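To put GPT-3's 175 billion parameters in perspective, here is a back-of-the-envelope calculation of how much memory the weights alone occupy at common numeric precisions. It is a rough sketch that assumes weights only: optimizer state, activations, and other runtime memory are ignored, and gigabytes are decimal (10^9 bytes).

```python
# Rough weight-storage estimate for a 175-billion-parameter model.
# Assumption: counts weights only (no optimizer state, activations, or caches).
NUM_PARAMS = 175_000_000_000

# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {
    "fp32": 4,
    "fp16/bf16": 2,
    "int8": 1,
}


def weight_memory_gb(num_params: int, bytes_per_param: int) -> float:
    """Return weight storage in decimal gigabytes (1 GB = 10**9 bytes)."""
    return num_params * bytes_per_param / 1e9


for dtype, nbytes in BYTES_PER_PARAM.items():
    print(f"{dtype:>9}: {weight_memory_gb(NUM_PARAMS, nbytes):,.0f} GB")
```

Even at 16-bit precision the weights come to about 350 GB, far more than a single GPU holds, which is one reason training and serving models of this size is spread across many GPUs.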

How GPT Models Work and Their Limitations

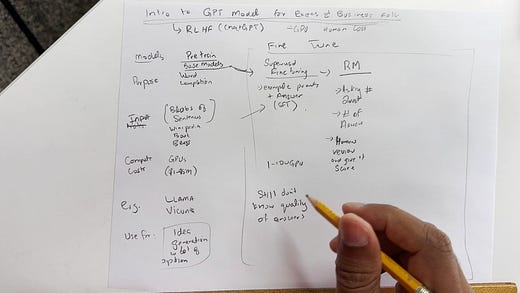

The training process of GPT assistant models involves four major stages: pretraining, supervised fine-tuning, reward modeling, and reinforcement learning. Pretraining is where most of the computational work happens: it runs for months on thousands of GPUs in a supercomputer. This stage starts by gathering a large amount of text from web sources such as CommonCrawl and C4, along with higher-quality datasets such as GitHub and Wikipedia. These sources are mixed together and sampled in set proportions to form the pretraining set.
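The mixing step can be pictured as weighted sampling: pick a source according to its mixture weight, then draw a document from that source. The sketch below is illustrative only. The toy documents and the weights in `MIXTURE_WEIGHTS` are made up for this example and are not the actual proportions used for any GPT model, and `sample_training_docs` is a hypothetical helper.

```python
import random
from collections import Counter

# Toy stand-ins for each data source; real corpora hold billions of documents.
SOURCES = {
    "CommonCrawl": ["web page 1", "web page 2", "web page 3"],
    "C4": ["cleaned web page 1", "cleaned web page 2"],
    "GitHub": ["def add(a, b): return a + b"],
    "Wikipedia": ["Article about transformers"],
}

# Hypothetical sampling weights, chosen for illustration only.
MIXTURE_WEIGHTS = {"CommonCrawl": 0.6, "C4": 0.2, "GitHub": 0.1, "Wikipedia": 0.1}


def sample_training_docs(n: int, seed: int = 0) -> list[tuple[str, str]]:
    """Draw n (source, document) pairs: choose a source by weight, then a document uniformly."""
    rng = random.Random(seed)  # seeded so the sample is reproducible
    names = list(MIXTURE_WEIGHTS)
    weights = [MIXTURE_WEIGHTS[name] for name in names]
    batch = []
    for _ in range(n):
        source = rng.choices(names, weights=weights, k=1)[0]
        batch.append((source, rng.choice(SOURCES[source])))
    return batch


docs = sample_training_docs(10_000)
counts = Counter(source for source, _ in docs)
for name in MIXTURE_WEIGHTS:
    # Observed share of each source; it should land close to its weight.
    print(f"{name:>11}: {counts[name] / len(docs):.3f}")
```

Because the weights are set independently of each source's size, a small, high-quality source can be sampled more often than its raw share of the data would suggest.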

However, these models have real limitations. They can generate incorrect or nonsensical responses. They have no context beyond the input they are given, and they can't access real-time or personal data unless it is shared with them during the conversation. They can also exhibit biases that reflect the biases present in their training data.

Incorporating Human Feedback into Model Design

One of the ways OpenAI improves these models is by incorporating human feedback into training. This happens in the later stages: human labelers compare and rank several model outputs for the same prompt, a reward model is trained on those rankings, and reinforcement learning then tunes the model toward outputs the reward model scores highly. The model then generalizes from this feedback to respond to a wide range of user inputs.
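The reward-modeling step can be sketched with a pairwise logistic loss, the formulation described in OpenAI's InstructGPT work: every pair taken from a labeler's ranking says "this completion beat that one", and the loss is small when the reward model scores the preferred completion higher. This is a minimal sketch for a single prompt. The score tables below are hypothetical stand-ins for a trained reward network, and the helper names are invented for illustration.

```python
import math
from itertools import combinations


def neg_log_sigmoid(x: float) -> float:
    """Numerically stable -log(sigmoid(x)), i.e. log(1 + exp(-x))."""
    if x >= 0:
        return math.log1p(math.exp(-x))
    return -x + math.log1p(math.exp(x))


def pairwise_comparisons(ranked: list[str]) -> list[tuple[str, str]]:
    """Turn a best-to-worst ranking into (preferred, rejected) pairs."""
    return list(combinations(ranked, 2))


def reward_model_loss(reward, ranked: list[str]) -> float:
    """Average -log(sigmoid(r_preferred - r_rejected)) over all ranked pairs."""
    pairs = pairwise_comparisons(ranked)
    return sum(neg_log_sigmoid(reward(p) - reward(r)) for p, r in pairs) / len(pairs)


# A labeler's ranking of three completions for one prompt, best first.
ranked = ["clear, correct answer", "vague answer", "off-topic answer"]

# Hypothetical reward scores standing in for a neural reward model.
agrees = {"clear, correct answer": 2.0, "vague answer": 0.5, "off-topic answer": -1.0}
disagrees = {"clear, correct answer": -1.0, "vague answer": 0.5, "off-topic answer": 2.0}

print(reward_model_loss(lambda c: agrees[c], ranked))     # low: matches the ranking
print(reward_model_loss(lambda c: disagrees[c], ranked))  # high: contradicts it
```

Training lowers this loss by adjusting the reward model's parameters; the reinforcement learning stage then uses the trained reward model's scores as its signal.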

This process helps align the model's behavior with human values, making it more useful and safer. It is a challenging task, though: the feedback process has to be designed carefully and refined iteratively based on what is learned.

I hope this blog post has given you a deeper understanding of large language models and the state of GPT models. Stay tuned for more insights, and don't forget to check out the video for a more detailed explanation.
