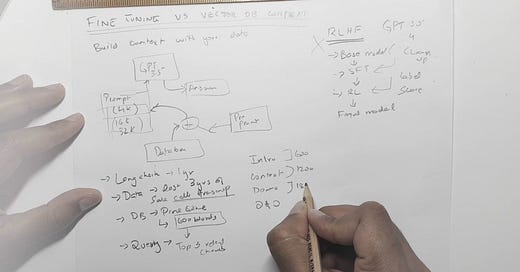

Hey everyone! Today, we're diving deep into two powerful strategies for maximizing the capabilities of large language models: Fine-Tuning and Vector Database Search. Why choose one over the other? Let's break it down.

🔍 Fine-Tuning: What's the Deal?

What it is: Fine-tuning means further training a pre-trained model, such as GPT-3.5 Turbo, on a task-specific dataset (typically example prompts paired with the responses you want) so that it performs better on that task.
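To make "a task-specific dataset" concrete: for GPT-3.5 Turbo, OpenAI's fine-tuning takes a JSONL file in which each line is one chat example under a `messages` key, using `system`, `user`, and `assistant` roles. Here is a minimal Python sketch that writes such a file. The support-bot examples, the system prompt, and the helper names `to_chat_record` and `write_jsonl` are hypothetical, and a real training set would need far more than two examples.

```python
import json

# Hypothetical support-bot examples; replace with your own domain data.
EXAMPLES = [
    ("How do I reset my password?", "Go to Settings > Security and click 'Reset password'."),
    ("Can I export my invoices?", "Yes. Open Billing, select the invoices, and choose 'Export CSV'."),
]

# Hypothetical system prompt shared by every training example.
SYSTEM_PROMPT = "You are a concise support assistant for ExampleCo."


def to_chat_record(question: str, answer: str, system: str = SYSTEM_PROMPT) -> dict:
    """Wrap one question/answer pair in the chat 'messages' format."""
    return {
        "messages": [
            {"role": "system", "content": system},
            {"role": "user", "content": question},
            {"role": "assistant", "content": answer},  # the response we want the model to learn
        ]
    }


def write_jsonl(pairs, path: str) -> int:
    """Write one JSON object per line and return the number of records written."""
    with open(path, "w", encoding="utf-8") as f:
        for question, answer in pairs:
            f.write(json.dumps(to_chat_record(question, answer), ensure_ascii=False) + "\n")
    return len(pairs)
```

Calling `write_jsonl(EXAMPLES, "train.jsonl")` produces a file you could then upload for fine-tuning. Keeping one example per line is what makes the format easy to stream and validate.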

Pros:

✅Tailor-made solutions

✅Better alignment with your use case

✅Highly accurate on the target task

Cons:

❌Costly in terms of computing power

❌Time-consuming

❌Risk of overfitting

🔭 Vector Database Search: An Alternative Approach

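What it is: Stores embeddings (numeric vectors) of your documents or past conversations in a database, retrieves the entries most similar to the user's query, and inserts them into the model's context window so the model can draw on them when answering. This pattern is often called retrieval-augmented generation (RAG).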
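The retrieval loop is simple enough to sketch end to end: embed the query, rank stored items by similarity, and paste the top hits into the prompt. In this minimal Python sketch, the bag-of-words `embed` function and the in-memory `TinyVectorStore` class are hypothetical stand-ins for a real embedding model and a real vector database; only the overall shape is meant to carry over.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': a bag-of-words term-count vector (stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class TinyVectorStore:
    """In-memory stand-in for a vector database: keeps (vector, text) pairs."""

    def __init__(self):
        self._items = []

    def add(self, text: str) -> None:
        self._items.append((embed(text), text))

    def search(self, query: str, k: int = 2) -> list:
        """Return the k stored texts most similar to the query (brute-force scan)."""
        q = embed(query)
        ranked = sorted(self._items, key=lambda item: cosine(q, item[0]), reverse=True)
        return [text for _, text in ranked[:k]]


def build_prompt(store: TinyVectorStore, question: str, k: int = 2) -> str:
    """Insert the top-k retrieved snippets into the model's context."""
    context = "\n".join(f"- {doc}" for doc in store.search(question, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"
```

A real vector database replaces the brute-force `sorted` scan with an approximate nearest-neighbor index, which is what keeps this approach cheap and scalable as the collection grows.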

Pros:

✅Quick to implement

✅Low computational cost

✅Easily scalable

Cons:

❌Lower accuracy

❌Not tailored for specialized tasks

❌May lack coherence

🆕 OpenAI's Latest: Fine-Tuning for GPT-3.5 Turbo

OpenAI recently launched fine-tuning for GPT-3.5 Turbo. This is a game-changer, and here's why:

🎯 Directly targets your business needs

💡 Allows for incremental updates

💰 It may require an upfront investment, but it pays off in the long run
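In practice, starting a GPT-3.5 Turbo fine-tune takes two API calls: upload a chat-format JSONL training file, then create a job that references it. This Python sketch assumes the official `openai` SDK (v1 or later), whose client exposes `files.create` and `fine_tuning.jobs.create`. The helper name `start_fine_tune` is hypothetical, and the default model name is an assumption; check which model snapshots are currently available for fine-tuning.

```python
def start_fine_tune(client, train_path: str, model: str = "gpt-3.5-turbo") -> str:
    """Upload a chat-format JSONL file and start a fine-tuning job; return the job ID.

    `client` is assumed to be an `openai.OpenAI()` instance (openai Python SDK v1+).
    """
    # Step 1: upload the training data, marked for fine-tuning.
    with open(train_path, "rb") as f:
        uploaded = client.files.create(file=f, purpose="fine-tune")

    # Step 2: create the job; training then runs asynchronously on OpenAI's side.
    job = client.fine_tuning.jobs.create(training_file=uploaded.id, model=model)
    return job.id
```

With the SDK installed and `OPENAI_API_KEY` set, you would call it as `start_fine_tune(OpenAI(), "train.jsonl")` and then poll `client.fine_tuning.jobs.retrieve(job_id)` for status. Passing the client in, rather than creating it inside the function, keeps the helper easy to test with a stub.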

🎲 Final Thoughts

For businesses looking to integrate cutting-edge AI, I'd bet on fine-tuning. While both approaches have merits, fine-tuning gives you a highly specialized model tailored to your specific needs.
